Amazon Web Services vs Google Cloud Platform

August 19, 2023 | Author: Michael Stromann


Access a reliable, on-demand infrastructure to power your applications, from hosted internal applications to SaaS offerings. Scale to meet your application demands, whether one server or a large cluster. Leverage scalable database solutions. Utilize cost-effective solutions for storing and retrieving any amount of data, any time, anywhere.


Google Cloud Platform is a set of modular cloud-based services that provide the building blocks to quickly develop anything from simple websites to complex applications. Explore how you can make Cloud Platform work for you.

Amazon Web Services (AWS) and Google Cloud Platform (GCP) are two leading cloud computing providers, offering a wide range of services and solutions. AWS has been in the market longer and has a more extensive portfolio of services, including computing, storage, networking, databases, AI/ML, and more. It provides highly scalable and flexible infrastructure that caters to the needs of businesses of all sizes. On the other hand, GCP focuses on innovation and offers a powerful set of cloud services, particularly in the areas of data analytics, AI/ML, and serverless computing. GCP is known for its data processing capabilities, machine learning tools, and its emphasis on containerization with Kubernetes. Both AWS and GCP provide global coverage, strong security features, and pay-as-you-go pricing models.

See also: Top 10 Public Cloud Platforms


Amazon Web Services vs Google Cloud Platform in our news:

2024. Google injects generative AI into its cloud security tools

Google has rolled out a suite of new cloud-based security offerings alongside updates to its existing lineup, targeting enterprises handling extensive, multi-tenant networks. Among these introductions is Gemini in Threat Intelligence, a fresh addition to Google's Mandiant cybersecurity platform, harnessing Gemini's capabilities. Currently available for public preview, Gemini in Threat Intelligence empowers users to analyze significant volumes of potentially malicious code, conduct natural language searches for ongoing threats or signs of compromise, and distill insights from open source intelligence reports across the internet. And in Security Command Center, Google’s enterprise cybersecurity and risk management suite, a new Gemini-driven feature lets security teams search for threats using natural language while providing summaries of misconfigurations, vulnerabilities and possible attack paths.

2022. Google Cloud will shutter its IoT Core service next year

This week, Google Cloud made the announcement that it will be discontinuing its IoT Core service, allowing customers a one-year timeframe to transition to a partner for the management of their IoT devices. Google believes that relying on partners to handle the process on behalf of customers is a more effective approach. A Google spokesperson explained, "Since the launch of IoT Core, it has become evident that our customers' needs can be better met by our network of partners who specialize in IoT applications and services. We have diligently worked to offer customers migration options and alternative solutions, and we are providing a year-long transition period before discontinuing IoT Core."

2022. Google expands Vertex, its managed AI service, with new features

Roughly one year ago, Google introduced Vertex AI, a managed AI platform designed to expedite the deployment of AI models for businesses. Today, Google has announced upcoming enhancements for Vertex, including a dedicated server for AI system training and the introduction of "example-based" explanations. As Google has consistently emphasized, Vertex offers the advantage of integrating Google Cloud services for AI within a unified user interface (UI) and application programming interface (API). According to Google, notable customers such as Ford, Seagate, Wayfair, Cashapp, Cruise, and Lowe's utilize Vertex to construct, train, and deploy machine learning models within a single environment, effectively transitioning from experimental stages to production.

2020. AWS launches Amazon AppFlow, its new SaaS integration service

AWS has launched Amazon AppFlow, an integration service designed to simplify data transfer between AWS and popular SaaS applications such as Google Analytics, Marketo, Salesforce, ServiceNow, Slack, Snowflake, and Zendesk. As with competing services like Microsoft's Power Automate, developers can configure AppFlow to trigger data transfers on specific events, on predetermined schedules, or on demand. Unlike some competitors, AWS positions AppFlow primarily as a data transfer service rather than an automation workflow tool. While the data flow can be bidirectional, AWS's emphasis is on moving data from SaaS applications to other AWS services for further analysis. To facilitate this, AppFlow includes various tools for transforming data as it passes through the service.

2019. Google Cloud gets a new family of cheaper general-purpose compute instances

Google Cloud has introduced its new E2 family of compute instances, designed for general-purpose workloads. These instances deliver savings of approximately 31% compared to the existing N1 general-purpose instances. The new system is also smarter about VM placement and can migrate VMs to other hosts as required. To achieve this, Google has developed a custom CPU scheduler. According to Google, unlike comparable offerings from other cloud providers, E2 VMs can sustain high CPU loads without artificial throttling or complex pricing structures. It will be interesting to see benchmarks that compare the E2 family against similar offerings from AWS and Azure.

2019. AWS launches fully-managed backup service for business

Amazon's cloud platform, AWS, has introduced a new service called Backup, allowing companies to securely back up their data from various AWS services as well as their on-premises applications. For on-premises data backup, businesses can utilize the AWS Storage Gateway. This service enables users to define backup policies and retention periods according to their specific requirements. It includes options such as transferring backups to cold storage for EFS data or deleting them entirely after a specified duration. By default, the data is stored in Amazon S3 buckets. While most of the supported services already offer snapshot creation capabilities (except for EFS file systems), Backup automates this process and adds customizable rules to enhance data protection. Notably, the pricing for Backup aligns with the costs associated with using the snapshot features (except for file system backup, which incurs a per-GB charge).

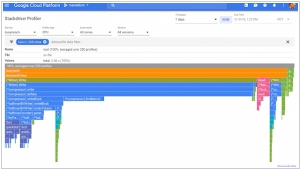

2018. Google Cloud adds new applications performance monitoring tool

Google has introduced a significant addition for developers working on applications within the Google Cloud Platform. They now have access to a comprehensive suite of application performance management tools known as Stackdriver APM. This suite empowers developers to directly track and address issues within the applications they have built, eliminating the need to rely solely on operations teams. The underlying idea is that developers, being intimately familiar with the code, are best positioned to comprehend the signals emanating from it. Stackdriver APM consists of three primary tools: Profiler, Trace, and Debugger. While Trace and Debugger were already available, the integration of Profiler allows all three tools to seamlessly collaborate in identifying, monitoring, and resolving code-related issues.

2018. Google Cloud adds simple machine learning service

Google has introduced AutoML, a service on Google Cloud that lets developers, even those without prior machine learning (ML) expertise, build custom models for image recognition. The scarcity of machine learning experts and data scientists in today's market is widely acknowledged. To address this, Google's new service enables virtually anyone to upload their images (and import or create tags within the application) and automatically generate a custom machine learning model using Google's systems. The entire process, from data import to tagging and model training, runs through a drag-and-drop interface. This goes beyond Microsoft's Azure ML Studio, which offers a Yahoo Pipes-like interface for building, training, and evaluating models.

2017. AWS launched browser-based IDE for cloud developers

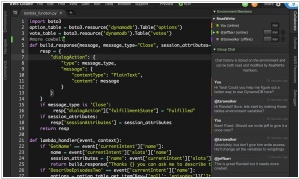

Amazon Web Services has introduced a new browser-based Integrated Development Environment (IDE) called AWS Cloud9. While it shares similarities with other IDEs and editors like Sublime Text, AWS emphasizes its collaborative editing capabilities and deep integration into the AWS ecosystem. The IDE includes built-in support for various programming languages such as JavaScript, Python, PHP, and more. Cloud9 also provides pre-installed debugging tools. AWS positions this as the first "cloud native" IDE, although competitors may contest this claim. Regardless, Cloud9 offers seamless integration with AWS, enabling developers to create cloud environments and launch new instances directly from the tool.

2017. Google Cloud Platform cuts the price of GPUs by up to 36 percent

Google is implementing a price reduction for Nvidia's Tesla GPUs on its Compute Engine service, with savings of up to 36 percent. In U.S. regions, the cost of utilizing the slightly older K80 GPUs has been reduced to $0.45 per hour, while the more advanced and powerful P100 machines will now cost $1.46 per hour, both with per-second billing. By doing so, Google aims to attract developers who seek to execute their own machine learning workloads on its cloud platform. Additionally, various other applications, such as physical simulations and molecular modeling, can greatly benefit from the extensive number of cores provided by these GPUs.
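To make the per-second billing concrete, here is a minimal sketch that estimates the cost of a GPU job from the two hourly rates quoted above. The function name `gpu_cost` and the 90-minute job are hypothetical, and the sketch assumes billing is purely proportional to seconds used, ignoring any minimum charge, the cost of the attached VM, and regional differences.

```python
# Hourly GPU rates quoted in the article (U.S. regions, 2017 prices).
HOURLY_RATE_USD = {"K80": 0.45, "P100": 1.46}


def gpu_cost(gpu: str, seconds: int, gpu_count: int = 1) -> float:
    """Estimate the USD cost of running `gpu_count` GPUs for `seconds`.

    Assumption: cost scales linearly per second with no minimum charge.
    """
    if seconds < 0 or gpu_count < 1:
        raise ValueError("seconds must be >= 0 and gpu_count >= 1")
    per_second = HOURLY_RATE_USD[gpu] / 3600  # convert hourly rate to per-second
    return round(per_second * seconds * gpu_count, 4)


if __name__ == "__main__":
    job_seconds = 90 * 60  # hypothetical 90-minute training job
    for gpu in HOURLY_RATE_USD:
        print(f"{gpu}: ${gpu_cost(gpu, job_seconds):.4f}")
```

Under these assumptions, a job that stops after 90 minutes is charged for exactly 1.5 GPU-hours rather than being rounded up to 2 full hours.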