Hadoop vs Splunk

November 12, 2023 | Author: Michael Stromann

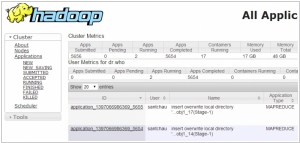

The Apache Hadoop software library is a framework that allows for the distributed processing of large data sets across clusters of computers using simple programming models. It is designed to scale up from single servers to thousands of machines, each offering local computation and storage. Rather than rely on hardware to deliver high availability, the library itself is designed to detect and handle failures at the application layer, thereby delivering a highly available service on top of a cluster of computers, each of which may be prone to failures.

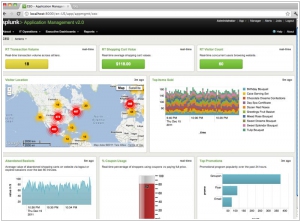

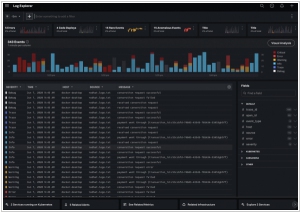

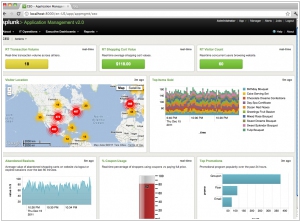

We make machine data accessible, usable and valuable to everyone—no matter where it comes from. You see servers and devices, apps and logs, traffic and clouds. We see data—everywhere. Splunk offers the leading platform for Operational Intelligence. It enables the curious to look closely at what others ignore—machine data—and find what others never see: insights that can help make your company more productive, profitable, competitive and secure.

Hadoop and Splunk are two popular tools used for managing and analyzing large volumes of data, but they have distinct differences in their approach and functionality. Hadoop is an open-source framework designed to handle big data processing and storage across distributed clusters of computers. It provides a scalable and fault-tolerant platform for processing and analyzing data using a distributed file system (HDFS) and the MapReduce programming model. Hadoop is typically used for batch processing and is highly customizable, allowing users to write their own MapReduce jobs to extract insights from data.

On the other hand, Splunk is a commercial log management and analysis platform that focuses on real-time data ingestion, indexing, and searching. It provides a powerful search interface, visualization capabilities, and machine learning features to help organizations gain insights from their machine-generated data, such as log files, event streams, and metrics. Splunk excels in its ability to provide real-time monitoring and alerting, making it suitable for operational intelligence and security use cases. While Hadoop is more of a general-purpose big data processing framework, Splunk is specifically tailored for log analysis and operational data intelligence.
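To make the MapReduce programming model mentioned above more concrete, here is a minimal in-memory sketch in plain Python. It does not use Hadoop or any Hadoop API; the function names (`map_phase`, `shuffle`, `reduce_phase`, `run_job`) and the sample log lines are illustrative assumptions, and a real job would read its input from HDFS and run the phases in parallel across a cluster.

```python
from collections import defaultdict
from typing import Dict, Iterable, Iterator, List, Tuple

# Hypothetical sample input; a real Hadoop job would read file splits from HDFS.
LOG_LINES = [
    "2023-11-12 10:00:01 ERROR disk full",
    "2023-11-12 10:00:02 INFO job started",
    "2023-11-12 10:00:03 ERROR timeout",
    "2023-11-12 10:00:04 WARN retrying",
]


def map_phase(line: str) -> Iterator[Tuple[str, int]]:
    """Map step: emit (log_level, 1) for one input record."""
    fields = line.split()
    # Assumed layout: date, time, level, message. Malformed lines are skipped.
    if len(fields) >= 3:
        yield fields[2], 1


def shuffle(pairs: Iterable[Tuple[str, int]]) -> Dict[str, List[int]]:
    """Shuffle step: group all emitted values by key."""
    grouped: Dict[str, List[int]] = defaultdict(list)
    for key, value in pairs:
        grouped[key].append(value)
    return dict(grouped)


def reduce_phase(key: str, values: List[int]) -> Tuple[str, int]:
    """Reduce step: collapse the values for one key into a single count."""
    return key, sum(values)


def run_job(lines: Iterable[str]) -> Dict[str, int]:
    """Run map, shuffle, and reduce sequentially in a single process."""
    mapped = (pair for line in lines for pair in map_phase(line))
    grouped = shuffle(mapped)
    return dict(reduce_phase(key, values) for key, values in grouped.items())


if __name__ == "__main__":
    print(run_job(LOG_LINES))  # {'ERROR': 2, 'INFO': 1, 'WARN': 1}
```

The example counts log lines per severity level, which is the kind of question Splunk answers interactively through its search interface. In the batch model sketched here the whole input is processed before any result is available.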

See also: Top 10 IT Monitoring software

Hadoop vs Splunk in our news:

2023. Cisco to acquire IT Monitoring giant Splunk for $28B

Cisco has announced that it is acquiring Splunk for $28 billion. This acquisition is strategically aligned with Cisco's security-focused business, as it gains access to Splunk's observability platform. This addition will enable Cisco to enhance its ability to assist customers in comprehending security threats while also providing valuable capabilities for analyzing extensive log data to address various challenges such as understanding system failures and troubleshooting a wide range of issues across enterprise systems. It's important to note that both company boards have already given their approval for the acquisition. However, it must undergo regulatory approval, which is not guaranteed due to the heightened scrutiny that such deals are encountering worldwide.

2020. Splunk acquires network observability service Flowmill

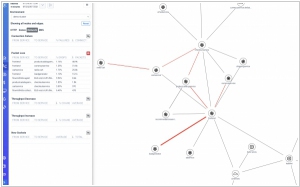

Data platform Splunk continues its acquisition streak as it expands its newly launched observability platform. Following the recent acquisitions of Plumbr and Rigor, the company has now announced the acquisition of Flowmill, a network observability startup based in Palo Alto. Flowmill specializes in helping users identify real-time network performance issues within their cloud infrastructure and offers traffic measurement by service to enable cost control. Similar to other players in this field, Flowmill leverages eBPF, a Linux kernel feature that allows the execution of sandboxed code without the need for kernel modification or loading kernel modules. This capability makes it particularly well-suited for application monitoring.

2020. Splunk acquires Plumbr and Rigor to build out its observability platform

Data platform Splunk has made two acquisitions, Plumbr and Rigor, to enhance its newly launched Observability Suite. Plumbr specializes in application performance monitoring, while Rigor focuses on digital experience monitoring. Through synthetic monitoring and optimization tools, Rigor helps businesses optimize their end-user experiences. These acquisitions add to the technology and expertise Splunk gained through its acquisition of SignalFx for over $1 billion in 2019.

2017. Splunk expands machine learning capabilities across platform

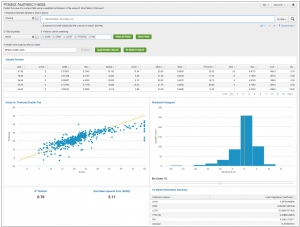

Cloud monitoring provider Splunk is bolstering its machine learning capabilities to facilitate the identification of critical data. The Splunk Machine Learning Toolkit introduces several new features specifically designed for those who prefer a do-it-yourself approach. Firstly, a new data cleaning tool has been implemented to prepare the data for modeling. Additionally, machine learning APIs have been introduced, enabling the importation of both open-source and proprietary algorithms for application within Splunk. Lastly, a machine learning management component allows for seamless integration of user permissions from Splunk into customized machine learning applications. For users seeking a more automated experience, Splunk offers new features such as Splunk ITSI 3.0. Leveraging machine learning, this tool assists in issue identification and prioritization based on the criticality of each operation to the business. These advancements empower users to derive meaningful insights from their data while tailoring the level of involvement according to their preferences.

2016. Splunk unveiled 300 machine learning algorithms for Operational Intelligence

Splunk, a leading provider of Operational Intelligence platforms, has made significant advancements in incorporating machine learning capabilities into its platform, thereby expanding its range of services and capabilities. The company has integrated machine learning at the core of its platform through the introduction of a machine learning toolkit, which can be installed as a complimentary app on top of the Splunk Enterprise platform. This toolkit offers users access to a comprehensive set of 300 machine learning algorithms, with 27 of them conveniently pre-packaged and ready to use. These algorithms cover various categories such as clustering, recommendations, regression, classification, and text analytics. Furthermore, Splunk has enhanced its machine learning functionality within the IT Service Intelligence (ITSI) platform, which was initially introduced a year ago.

2015. Splunk acquired machine learning startup Caspida

Cloud monitoring provider Splunk has acquired Caspida, a startup that uses machine learning methods to detect both internal and external cybersecurity threats. Splunk helps organizations manage the influx of machine-generated data from their IT systems, employing data science techniques and automation to derive insights from it. Within its product portfolio, Splunk provides a security solution called Splunk App For Enterprise Security. By acquiring Caspida, Splunk enhances its security capabilities with the machine learning techniques Caspida developed. This allows Splunk to analyze user behavior at a granular level, even for seemingly legitimate users with proper credentials. Splunk's overall approach revolves around data science-driven solutions, delivering automated threat detection and using machine learning to improve its capabilities over time.

2014. MapR partners with Teradata to reach enterprise customers

The last remaining independent Hadoop provider, MapR, and the prominent big data analytics provider, Teradata, have joined forces to collaborate on integrating their respective products and developing a unified go-to-market strategy. As part of this partnership, Teradata gains the ability to resell MapR software, professional services, and provide customer support. Essentially, Teradata will act as the primary interface for enterprises that utilize or aspire to use both technologies, serving as the representative for MapR. Previously, Teradata had established a close partnership with Hortonworks, but it now extends its collaboration and analytic market leadership to all three major Hadoop providers. Similarly, earlier this week, HP unveiled Vertica for SQL on Hadoop, enabling users to access and analyze data stored in any of the three primary Hadoop distributions—Hortonworks, MapR, and Cloudera.

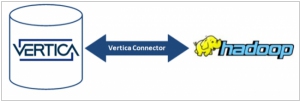

2014. HP plugs the Vertica analytics platform into Hadoop

HP has unveiled Vertica for SQL on Hadoop. With it, customers can access and analyze data stored in any of the three primary Hadoop distributions (Hortonworks, MapR, and Cloudera) or in any combination of them. Because it remains unclear which Hadoop flavor will dominate, many large companies use all three. HP is among the first vendors to assert that "any flavor of Hadoop will do." HP has also invested $50 million in Hortonworks, which is currently the favored Hadoop flavor within HAVEn, HP's analytics stack. The announcement emphasizes the platform's interoperability and its ability to work with data stored in environments such as data lakes or enterprise data hubs, giving organizations a way to explore data held in the Hadoop Distributed File System (HDFS). HP presents the combination of Vertica's power, speed, and scalability with Hadoop's capacity for handling extensive data sets as a way to persuade hesitant managers to commit to big data initiatives.

2014. Cloudera helps to manage Hadoop on Amazon cloud

Hadoop vendor Cloudera has unveiled a new offering named Director, aimed at simplifying the management of Hadoop clusters on the Amazon Web Services (AWS) cloud. Clarke Patterson, Senior Director of Product Marketing, acknowledged the challenges faced by customers in managing Hadoop clusters while maintaining extensive capabilities. He emphasized that there is no difference between the cloud version and the on-premises version of the software. However, the Director interface has been specifically designed to be self-service, incorporating cloud-specific features like instance-tracking. This enables administrators to monitor the cost associated with each cloud instance, ensuring better cost management.

2013. Splunk launches Splunk Cloud

Splunk, the leading software platform for real-time operational intelligence, has announced the general availability of Splunk Cloud, a new service that brings Splunk Enterprise to the cloud. With Splunk Cloud, organizations can now gain visibility and operational insights into their machine-generated big data in the cloud, while also correlating this data across their cloud and on-premises environments. The introduction of Splunk Cloud for large-scale production environments expands the offerings of Splunk Storm, the cloud-based service introduced last year, which now provides free developer access to 20GB of total storage per month. Powered by Amazon Web Services, Splunk Cloud includes access to all features of the Splunk Enterprise platform, including apps, APIs, alerting, and role-based access controls.