Apache Spark vs Google BigQuery

May 26, 2023 | Author: Michael Stromann

Apache Spark and Google BigQuery are both powerful tools for processing and analyzing large-scale data, but they differ in their architecture, deployment models, and target use cases.

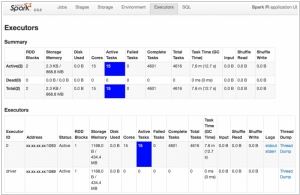

Apache Spark is an open-source big data processing framework that provides a unified analytics engine for distributed data processing. It offers APIs in Scala, Java, Python, and R (plus SQL), which makes it flexible across many kinds of data processing tasks. Spark is known for in-memory computing: it can cache intermediate data in cluster memory instead of rereading it from disk, which speeds up processing and especially iterative algorithms. It supports batch processing, real-time streaming, machine learning, and graph processing. Spark runs on clusters (under its own standalone cluster manager, Hadoop YARN, or Kubernetes) and integrates well with other big data tools in the Hadoop ecosystem.

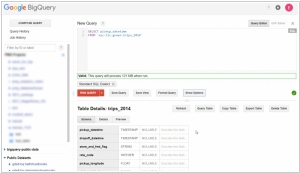

Google BigQuery, on the other hand, is a fully managed, serverless data warehouse and analytics platform offered by Google Cloud. It is designed for querying and analyzing large datasets with SQL, with a focus on simplicity and scalability. BigQuery uses a distributed architecture to handle massive volumes of data and runs queries quickly on Google's infrastructure, without users provisioning or managing servers. It supports automatic scaling, high concurrency, and built-in machine learning through BigQuery ML. BigQuery excels at ad-hoc queries, exploratory analysis, and business intelligence.

In short, the two differ in architecture, deployment model, and target use cases. Spark is a general-purpose engine for distributed data processing that runs on a cluster you (or a managed service) operate; it supports several programming languages and a broad range of operations, which suits complex data processing, machine learning, and real-time analytics. BigQuery is a fully managed data warehouse: you query your data with SQL and Google handles the infrastructure, which makes it simple to use and easy to scale. It is best suited to ad-hoc analysis, business intelligence, and exploratory data analysis.

See also: Top 10 Big Data platforms

Apache Spark vs Google BigQuery in our news:

2015. IBM bets on big data Apache Spark project

IBM has announced a major commitment to Apache Spark, the open-source big data project, and plans to assign a team of 3,500 researchers to the initiative. It also announced that it will open-source its own IBM SystemML machine learning technology. Both moves aim to position IBM as a frontrunner in big data and machine learning; cloud, big data, analytics, and security are the pillars of IBM's transformation strategy. As part of the announcement, IBM committed to integrating Spark into its core analytics products and to partnering with Databricks, the commercial entity established to support the open-source Spark project. IBM's motives are not purely altruistic. By engaging actively with the open-source community, IBM aims to establish itself as a trusted contributor in big data, which strengthens its credibility with companies running big data and machine learning projects on open-source tools. That standing, in turn, opens doors for IBM to offer consulting services and pursue other business opportunities in this space.

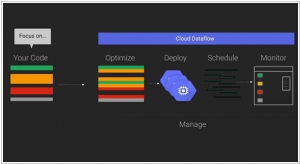

2015. Google partners with Cloudera to bring Cloud Dataflow to Apache Spark

Google has announced a collaboration with Cloudera, the Hadoop specialists, to bring its Cloud Dataflow programming model to the Apache Spark data processing engine. Cloud Dataflow lets developers create and monitor data processing pipelines without managing the underlying processing cluster; the service grew out of Google's internal tools for processing very large datasets at internet scale. Not every processing job is the same, however, and some need to run in different environments: in the cloud, on premises, or on different processing engines. With Cloud Dataflow, data analysts can use the same system to build pipelines regardless of the architecture they choose to deploy them on.