Amazon Web Services vs Heroku

August 19, 2023 | Author: Michael Stromann

Access a reliable, on-demand infrastructure to power your applications, from hosted internal applications to SaaS offerings. Scale to meet your application demands, whether one server or a large cluster. Leverage scalable database solutions. Utilize cost-effective solutions for storing and retrieving any amount of data, any time, anywhere.

Amazon Web Services (AWS) and Heroku are both popular cloud computing platforms, but they differ in their target audience, deployment models, and level of control. AWS is a comprehensive cloud platform that offers a wide range of services, including compute, storage, networking, and databases. It caters to a broad range of users, from small startups to large enterprises, providing extensive flexibility, scalability, and control over infrastructure. Heroku, on the other hand, is a Platform-as-a-Service (PaaS) that simplifies application deployment and management. It is geared towards developers and focuses on ease of use and quick deployment, abstracting away infrastructure details. Heroku offers a streamlined experience with automatic scaling and database integration, making it suitable for developers who prioritize simplicity and speed.

See also: Top 10 Public Cloud Platforms

Amazon Web Services vs Heroku in our news:

2020. AWS launches Amazon AppFlow, its new SaaS integration service

AWS has recently launched Amazon AppFlow, an integration service designed to simplify data transfer between AWS and popular SaaS applications such as Google Analytics, Marketo, Salesforce, ServiceNow, Slack, Snowflake, and Zendesk. Similar to competing services like Microsoft Azure's Power Automate, developers can configure AppFlow to trigger data transfers based on specific events, predetermined schedules, or on-demand requests. Unlike some competitors, AWS positions AppFlow primarily as a data transfer service rather than an automation workflow tool. While the data flow can be bidirectional, AWS's emphasis is on moving data from SaaS applications to other AWS services for further analysis. To facilitate this, AppFlow includes various tools for data transformation as it passes through the service.

2019. AWS launches fully-managed backup service for business

Amazon's cloud platform, AWS, has introduced a new service called Backup, allowing companies to securely back up their data from various AWS services as well as their on-premises applications. For on-premises data backup, businesses can utilize the AWS Storage Gateway. This service enables users to define backup policies and retention periods according to their specific requirements. It includes options such as transferring backups to cold storage for EFS data or deleting them entirely after a specified duration. By default, the data is stored in Amazon S3 buckets. While most of the supported services already offer snapshot creation capabilities (except for EFS file systems), Backup automates this process and adds customizable rules to enhance data protection. Notably, the pricing for Backup aligns with the costs associated with using the snapshot features (except for file system backup, which incurs a per-GB charge).
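
The policy idea described above (move a backup to cold storage after some time, or delete it after a set duration) can be sketched as a small data structure. This is only an illustration under stated assumptions: `BackupRule`, its field names, and `backup_state` are hypothetical and are not part of the AWS Backup API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class BackupRule:
    """Hypothetical retention rule; names are illustrative, not AWS Backup's API."""
    cold_storage_after_days: Optional[int]  # None means never move to cold storage
    delete_after_days: Optional[int]        # None means keep indefinitely


def backup_state(rule: BackupRule, age_days: int) -> str:
    """Return where a backup of the given age would sit under the rule."""
    if rule.delete_after_days is not None and age_days >= rule.delete_after_days:
        return "deleted"
    if (rule.cold_storage_after_days is not None
            and age_days >= rule.cold_storage_after_days):
        return "cold"
    return "warm"


# Assumed example values: cold storage after 30 days, deletion after 365 days.
rule = BackupRule(cold_storage_after_days=30, delete_after_days=365)
for age in (10, 30, 400):
    print(age, backup_state(rule, age))
```

Checking deletion before the cold-storage transition keeps the function correct even if a rule sets both thresholds to the same day.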

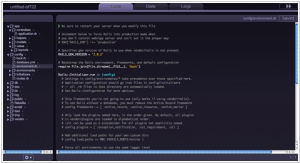

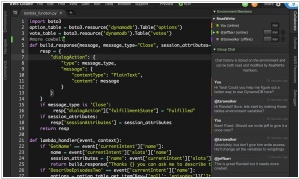

2017. AWS launched browser-based IDE for cloud developers

Amazon Web Services has introduced a new browser-based Integrated Development Environment (IDE) called AWS Cloud9. While it shares similarities with other IDEs and editors like Sublime Text, AWS emphasizes its collaborative editing capabilities and deep integration into the AWS ecosystem. The IDE includes built-in support for various programming languages such as JavaScript, Python, PHP, and more. Cloud9 also provides pre-installed debugging tools. AWS positions this as the first "cloud native" IDE, although competitors may contest this claim. Regardless, Cloud9 offers seamless integration with AWS, enabling developers to create cloud environments and launch new instances directly from the tool.

2017. AWS introduced per-second billing for EC2 instances

In recent years, several alternative cloud platforms have shifted towards more flexible billing models, primarily adopting per-minute billing. However, AWS is taking it a step further by introducing per-second billing for its Linux-based EC2 instances. This new billing model applies to on-demand, reserved, and spot instances, as well as provisioned storage for EBS volumes. Furthermore, both Amazon EMR and AWS Batch are transitioning to this per-second billing structure. It is important to note that there is a minimum charge of one minute per instance, and this change does not affect Windows machines or certain Linux distributions that have their own separate hourly charges.
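
To see what the change means for short-lived instances, the billing rule above can be sketched as follows. The one-minute minimum comes from the text; the hourly rate is a hypothetical placeholder, and the comparison assumes the earlier model charged every started hour in full.

```python
import math

MINIMUM_BILLED_SECONDS = 60  # one-minute minimum per instance, as stated above


def per_second_cost(runtime_seconds: float, hourly_rate: float) -> float:
    """Cost under per-second billing with a one-minute minimum."""
    billed_seconds = max(math.ceil(runtime_seconds), MINIMUM_BILLED_SECONDS)
    return billed_seconds * hourly_rate / 3600


def per_hour_cost(runtime_seconds: float, hourly_rate: float) -> float:
    """Cost when every started hour is billed in full (assumed older model)."""
    billed_hours = max(math.ceil(runtime_seconds / 3600), 1)
    return billed_hours * hourly_rate


# Hypothetical rate of $0.10 per hour, chosen only for illustration.
rate = 0.10
for runtime in (20, 90, 3601):
    print(runtime, per_second_cost(runtime, rate), per_hour_cost(runtime, rate))
```

Under these assumptions, a job that runs for 90 seconds is billed for 90 seconds rather than a full hour, while a job that runs for 20 seconds is still billed for the 60-second minimum.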

2017. AWS offers a virtual machine with over 4TB of memory

AWS has introduced its largest EC2 machine yet, the x1e.32xlarge instance, with 4.19TB of RAM. This is a significant upgrade from the previous largest EC2 instance, which offered just over 2TB of memory. These machines are equipped with quad-socket Intel Xeon processors operating at 2.3 GHz, up to 25 Gbps of network bandwidth, and two 1,920GB SSDs. Only a select few applications require this level of memory capacity. Accordingly, these instances are certified for running SAP's HANA in-memory database and its associated tools, with SAP offering direct support for deploying these applications on these instances. It's worth mentioning that Microsoft Azure's largest memory-optimized machine currently reaches just over 2TB of RAM, while Google's maximum memory capacity caps at 416GB.

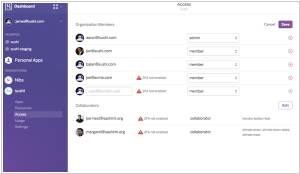

2015. Heroku launches application development platform for Enterprise

Heroku, the application development and hosting platform owned by Salesforce, has introduced a new offering known as Heroku Enterprise. This product line specifically targets large companies aiming to develop modern applications similar to those found in startups, while also providing the security features and access control often sought by enterprises. Essentially, Heroku Enterprise claims to offer the best of both worlds by enabling large enterprises to create agile-developed applications utilizing cutting-edge technologies like containers and new database services, all while adhering to the stringent governance requirements of the enterprise environment. It's an ambitious goal, and if Heroku successfully accomplishes it, they deserve commendation. With Heroku Enterprise, organizations can now effectively monitor all their developers, applications, and resources through a unified interface, streamlining their management processes.

2014. AWS now supports Docker containers

Amazon has announced the preview availability of EC2 Container Service, a new service dedicated to managing Docker containers and enhancing the support for hybrid cloud in Amazon Web Services. This offering brings a range of benefits, including streamlined development management, seamless portability across different environments, reduced deployment risks, smoother maintenance and management of application components, and comprehensive interoperability. It is important to note that AWS is not the first cloud provider to support Docker's open-source engine. Google has recently expanded its support for Docker containers through the introduction of its Google Container Engine, which is powered by its own Kubernetes and was announced just last week during the Google Cloud Platform Live event. Furthermore, Microsoft had previously announced its support for Kubernetes in managing Docker containers in Azure back in August.

2014. Salesforce connects Heroku to its cloud

After acquiring the Heroku cloud application platform in 2010, Salesforce has finally established a connection between Heroku and Force.com through the introduction of the Heroku Connect tool. Although Heroku and Force.com are built on different development systems with distinct programming languages, Salesforce has successfully established a functional bi-directional link between them. This connection eliminates the need for extensive recoding, which can be both expensive and time-consuming, allowing Salesforce customers to effortlessly connect their Heroku apps to the Salesforce ecosystem. Salesforce recognizes the importance of showcasing tools like Heroku Connect to attract developers and assure them that Force.com seamlessly integrates with popular web toolkits like Node.js, Ruby on Rails, and Java.

2014. Amazon and Microsoft drop cloud prices

Cloud computing is witnessing a continuous decline in costs. Therefore, if you previously assessed the cost-effectiveness of migrating your IT infrastructure to the cloud and determined it to be expensive, it is worth recalculating now. Cloud platforms have significantly reduced their prices, and another round of reductions is currently underway. Starting tomorrow, the pricing for Amazon S3 cloud storage will decrease by 6-22% (depending on the amount of space used), while the cost of cloud server hard drives (Amazon EBS) will drop by 50%. Additionally, Microsoft's cloud platform, Windows Azure, will lower its prices by 20% a month later to ensure they remain slightly below Amazon's. Given these developments, it's worth reconsidering the need to invest in an in-house server when the cost of cloud services is approaching zero.
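
The recalculation suggested above comes down to simple percentage arithmetic, sketched below. Only the reduction percentages come from the text; the starting prices are hypothetical placeholders, not the vendors' actual rates.

```python
def reduced_price(old_price: float, reduction_percent: float) -> float:
    """Return the price after cutting old_price by reduction_percent."""
    if not 0 <= reduction_percent <= 100:
        raise ValueError("reduction_percent must be between 0 and 100")
    return old_price * (1 - reduction_percent / 100)


# Hypothetical starting prices in dollars per GB per month (assumed for illustration).
old_s3_price = 0.10
old_ebs_price = 0.10

# S3: 6-22% lower, depending on the amount of space used.
s3_smallest_cut = reduced_price(old_s3_price, 6)
s3_largest_cut = reduced_price(old_s3_price, 22)

# EBS: 50% lower.
new_ebs_price = reduced_price(old_ebs_price, 50)

print(f"S3: ${s3_largest_cut:.3f} to ${s3_smallest_cut:.3f} per GB-month")
print(f"EBS: ${new_ebs_price:.3f} per GB-month")
```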

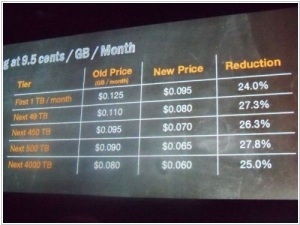

2012. Google and Amazon reduce cloud storage prices. Launch new cloud services

Competition is good for customers. On Monday, Google reduced prices for its Google Cloud Storage by over 20%, and today, in response, Amazon has reduced prices for its S3 storage by 25%. Microsoft will most likely follow in the near future and reduce prices for Windows Azure to bring them to the competitive level of about $0.09 per GB per month. The same thing occurred in March, when Amazon lowered prices and then Microsoft and Google aligned their pricing with Amazon's. In the cloud platform market, price is no longer a competitive advantage, but if your pricing is higher than the competition's, it's a big disadvantage. Some experts already doubt that Amazon and its rivals earn anything from selling gigabytes and gigahertz. As in the mobile market, the main task for cloud vendors is to hook large companies and SaaS providers onto their platforms, even if that means selling computing resources at a loss.